Data maturity.

A term often bandied about in government as we try to capture our collective progress along our data journey.

The appeal is understandable. Maturity, after all, is a familiar concept. And given the pressing need to understand how government agencies are, or aren't, making use of their data assets, why not apply it in that context?

Our government is keen to invest intelligently in data infrastructure and capability, and to do so properly requires three things:

With that in mind, relative data maturity as a measure certainly fits the bill.

Historically, our attempts to assess data maturity have not yielded much. Many of the government agencies who have taken the time to formally measure their data maturity would be hard-pressed to articulate any resulting tangible, long-lasting, and positive impacts.

The problem with our current approach is twofold:

Data maturity is an inherently subjective idea. When asked, people often describe it in terms of their 'sense' of where an organisation falls on some spectrum, using language that tends to be vague and generalised.

These descriptions suggest that data maturity is an element of organisational identity, operating in the rarefied air where we characterise our high-level understanding of why an organisation exists. This is the realm of vision statements, where our view of an organisation is deliberately uncomplicated and generates an emotional response.

In contrast, our maturity assessments are often highly specific and quantitative, relying on numerical scores across a list of technical data measures. To date, the most commonly employed data maturity assessment in government is one developed by the Department of Internal Affairs (DIA), which generates both current and future state scores (to one decimal place) for 16 distinct measurement areas.

The disconnect between the quantitative scoring models and the qualitative nature of data maturity all but ensures the assessment results will be of limited value.

In fact, while presenting themselves as dispassionate, quantitative assessment mechanisms, these models often operate in a decidedly qualitative manner. For instance, the DIA maturity tool generates its scores via a self-assessment, which (particularly in the case of future state scoring) is subjective by nature.

To make sense of their assessment results, a list of 16 precise and often jargon-heavy data measures, organisations inevitably communicate them as a single number: the average of all the scores. This not only negates the value of the constituent measures, but clearly demonstrates the desire to express maturity in a simple, qualitative way.

If data maturity assessments are to be of value as a business tool, for instance informing data investment planning, they must express their results with clarity, meaning, and relevance to the executive leadership responsible for investment decisions.

With this in mind, I’ve come up with what I believe is a more viable approach to capturing data maturity. Unlike traditional assessment models, which position improving data maturity scores as business outcomes in and of themselves, this approach more appropriately situates data maturity assessment in support of business outcomes.

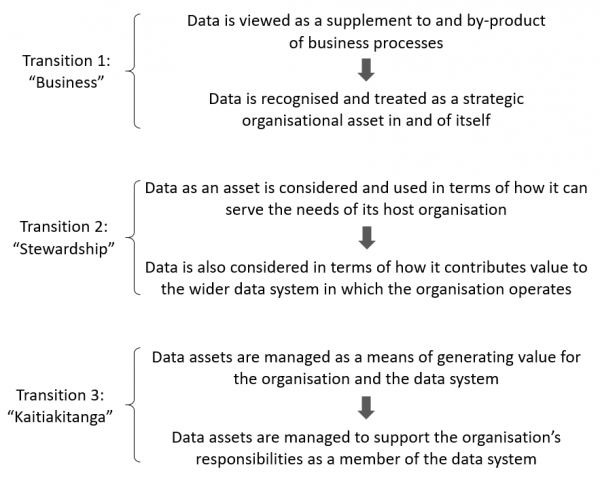

In this view, data maturity is expressed in terms of three transitions, resulting in a business, a stewardship, or a kaitiakitanga state.

As the numerical sequencing of the transitions suggests, achievement of each of these three states represents an increasing level of data maturity. Moving across these transitions, an organisation recognises its data as a strategic business asset, understands the value of its data more broadly as a data system asset, and finally moves from a purely value-based consideration of data to one that also acknowledges and emphasises the responsibilities associated with its data.

The designation of the highest level of data maturity as responsibility-based is a critical element of this approach. For instance, it provides a way to acknowledge government agencies that implement data management practice in a manner that supports the ethical use of data, recognition of data sovereignty, and delivery of obligations under Te Tiriti o Waitangi as an inherent part of their operations.

An assessment associated with this view of data maturity can logically reflect improvement as an organisation moves from state 1 to state 3. But in practice, it need not employ all three states, nor always start at transition 1. The best use of this approach will be informed by initial self-reflection, which includes agreement on where data fits within the broader set of organisational strategic goals.

Based on that, an organisation may decide that it can best assess and improve its data maturity by focussing first on transitioning to a state above 1, or it may be satisfied to remain at an achieved state without immediately considering how to move on to a higher-level transition.

Regardless of how it chooses to employ them, once an organisation successfully navigates any of these transitions, it can rightly claim to have lifted its data maturity. It can more readily define and describe an evolution, where data is increasingly influential, and ultimately helps that organisation to operate more responsibly as a member of the wider data system.

And by characterising data maturity in a way that resonates with senior leadership and decision-makers, this approach is likely to help break down unproductive barriers that exist between business and data-centric functions within an organisation.

The application of this view of data maturity also opens a path for the increased use of qualitative measures – the capture of narratives and case studies for instance – in relation to maturity assessment values. This is important in that qualitative measures reflect a subjective concept like data maturity much more effectively than quantitative measures. And they do so without forfeiting the potential to highlight specific improvements to be made to things like data infrastructure and capability as part of data investment planning.

In support of the wider data system, this approach can still be used to generate relative comparisons of data maturity between different organisations, in the same way that the averaged single score method is used currently. In this case, however, the assessed value of states 1, 2, or 3, while providing a simple numerical value for comparison, also offers a meaningful view of the relative data maturity of each organisation.

If you'd like more information, have a question, or want to provide feedback, email datalead@stats.govt.nz.

Visit data.govt.nz for data updates, guidance, resources and news.

Photo by Mungyu Kim on Unsplash